A Feedforward Neural Network

Many social protocols are based on identity. A woman offers her seat on a bus to an elderly man. Later, a young man offers his seat to the woman. In each case, the identity of the interacting participants dictated their behaviour according to social convention. Described here is an experiment in which giving neural-network-based organisms an arbitrarily assigned identity in contentious situations stimulated them to spontaneously develop a protocol to avoid conflict. This was advantageous to the group as a whole.

(Warning: A long discussion follows. Here is a quick summary for those well versed in the subject.)

Hungry Neural Gnats seeking Fungi to eat

In the beginning, a colony of 32 neural network based organisms is confined to a space that is effectively a long corridor that loops back to itself like a squared torus, with 64 food particles evenly distributed throughout. Each organism has a "brain" consisting of a neural network with some predetermined number of input neurons, neurons in the hidden layer, and neurons for output. Random synaptic weights are initially assigned to each neuron of each organism.

The organisms were evolved via a genetic algorithm to wander their world in search of "food". As each morsel of food is consumed, another appears magically elsewhere in the world, such that there is always exactly the same number of food particles available at all times.

At each step, information required to navigate and find food is presented on the neural net's inputs, and the network responds via a pair of output neurons with which it expresses the direction and speed of the next step it wants to take. After the colony has completed several thousand steps in this world, each individual is scored by the total calories it consumed, and a genetic algorithm uses those scores to determine which "genes" (the synaptic weights) will make up the next generation. It is survival of the fittest. A small number of "elites" are carried forward to the next generation exactly as they are. Offspring of the fittest are created via roulette wheel selection. Some of the offspring are mutated. Often some mutations will be beneficial and thus the colony evolves with each generation.
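The generational step described above can be sketched roughly as follows. This is only an illustration, not the code from the actual application: the function name `next_generation`, the number of elites, the mutation rate and scale, and the use of single-point crossover between two roulette-selected parents are all assumptions made for the example.

```python
import random

def next_generation(population, fitness, n_elite=2,
                    mutation_rate=0.1, mutation_scale=0.3, rng=None):
    """Build a new population of genomes (flat lists of synaptic weights).

    population: list of genomes, each a list of floats (length >= 2)
    fitness:    non-negative score (calories consumed) for each genome
    All numeric defaults are illustrative assumptions.
    """
    rng = rng or random.Random()

    # Elites: the top scorers are carried forward exactly as they are.
    ranked = sorted(zip(fitness, population), key=lambda pair: pair[0], reverse=True)
    new_population = [list(genome) for _, genome in ranked[:n_elite]]

    # Roulette wheel: chance of being picked is proportional to fitness.
    # The tiny epsilon keeps the wheel valid if every score is zero.
    wheel = [f + 1e-9 for f in fitness]

    while len(new_population) < len(population):
        mother, father = rng.choices(population, weights=wheel, k=2)
        cut = rng.randrange(1, len(mother))   # assumed single-point crossover
        child = mother[:cut] + father[cut:]
        # Some weights of some offspring are nudged at random (mutation).
        child = [w + rng.gauss(0.0, mutation_scale) if rng.random() < mutation_rate else w
                 for w in child]
        new_population.append(child)

    return new_population
```

Calling `next_generation(colony, scores)` once per round, after scoring, would give the next colony of the same size with the best genomes preserved untouched.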

This world in which they live is not without challenges to overcome. The organisms have to first learn how to navigate to food and are in competition with their neighbours for it. They also have to learn to avoid fruitlessly banging their heads against walls which they cannot pass through. To add to the complications (and to prevent them from piling up on top of each other), this world has a Law: Food consumed in the presence of a nearby competitor provides no nourishment. This sets up an imperative to develop strategies to avoid neighbours when eating in order to be successful.

In human society, situations arise all the time that could lead to confusion or conflict, and we have developed protocols to smooth the way. For example, take two people approaching a door at the same time. Who will enter first? What kind of world would it be if we all just barged ahead regardless? Not very pleasant.

We have developed a notion of "politeness". The second to arrive at the door will often defer to the first. A man will often defer to a woman, a youth will often defer to an elder, etc. It doesn't always work out so easily though. When two peers arrive at exactly the same moment we may end up with the classical comedy where each is saying "After you", or sometimes we will witness rude behaviour.

We line up at the bank, we take turns in conversations and games, and have endless other protocols like saying "please", "thank you" and "excuse me". Though we still haven't quite yet worked out who takes the last cookie on the plate, by and large we can navigate through our daily lives harmoniously, thanks to our protocols.

Several of the scenarios above depend on a clear differentiation between self and other to determine the appropriate role. Who arrived first and who arrived second. Elder and youth. Male and female. How could I give my organisms some sense of unique identity so that they could know self from other and establish some kind of protocols based on that?

In the original experiment there were 5 inputs upon which were presented the direction and distance to the nearest food particle, the direction and distance to the nearest neighbour, and a special input for "Current Identity". (Later experiments were simplified to just two inputs - discussed far below.)

I adopted a tri-state concept of "Handedness" as a label to distinguish one organism from another during an encounter. Each organism is arbitrarily assigned either "Right-handedness" or "Left-handedness" at the start of each generation, and there are equal numbers of each persuasion. Then, as they navigate about the world and encounter competitors, if both happen to have the same handedness at the moment of encounter, the first to arrive upon the scene is switched to the opposite handedness of the other.

These organisms have no memory. Living in the "Eternal Now" they would never know their identity was different one moment to the next. They could, however, carry with them at all times a repertoire of different behaviours that could be triggered for either identity as reported on the neural input.

Since there are a great many encounters during a round, this ensures that in the end any organism spends an approximately equal amount of time being labelled as either handedness.

If there was no conflict over a piece of food, this input for current identity was set to zero. If there was a conflict, where an organism and its neighbour both happened to find themselves headed for the same morsel, the organism's current handedness was input as 1 for "Left-handed" or -1 for "Right-handed". Thus there were three possible states: Left-handed, Right-handed, and Ambivalent (or ambidextrous, if you prefer).
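A minimal sketch of this labelling scheme is given here. The encoding (1, -1, 0) comes from the description above; the function names and the way an "encounter" is passed in are hypothetical, chosen only for illustration, and an even colony size is assumed.

```python
import random

LEFT, RIGHT, AMBIVALENT = 1.0, -1.0, 0.0

def initial_handedness(n_organisms, rng=None):
    """Assign equal numbers of left- and right-handers at random.

    Assumes n_organisms is even (the colony described has 32 members).
    """
    rng = rng or random.Random()
    labels = [LEFT, RIGHT] * (n_organisms // 2)
    rng.shuffle(labels)
    return labels

def resolve_encounter(first_arrival, second_arrival):
    """Return the (first, second) handedness after an encounter.

    If both carry the same label, the first to arrive is flipped so that
    every encounter involves one left-hander and one right-hander.
    """
    if first_arrival == second_arrival:
        first_arrival = -second_arrival
    return first_arrival, second_arrival

def identity_input(handedness, in_contention):
    """Value shown on the identity input: 0 unless food is contested."""
    return handedness if in_contention else AMBIVALENT
```

For example, `resolve_encounter(LEFT, LEFT)` yields `(RIGHT, LEFT)`, and `identity_input(RIGHT, False)` yields `0.0`, the "Ambivalent" state.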

Thus any given interaction always involved a right-handed organism and a left-handed organism. Each organism could decide how that interaction should play out based on its current handedness if it so chose, but they were given no hint as to what they should do.

What encouraged me to try this was how obvious it appeared to me that if they cooperate they could gain far more points than if they are always simply avoiding each other and "leaving the last cookie on the plate".

My very first experiment was a success. After a few hundred generations during which they learned the basics of navigation, they often would discover a protocol to enable them to take turns when two individuals find themselves in contention over the same bit of food. Thereby all would eat better.

Perhaps it begins with some sort of neural dysfunction in the mutant offspring of a top scorer, where, for example, a 0 or 1 on its identity input does not interfere with navigating to food, but a -1 does. Suddenly it could be said that, in effect, it is deferring to the competitor when its identity is -1, but in all other situations it continues to be a top scorer. If by luck it was also able to pass its genes along to the next generation, its progeny would suddenly all be taking turns whenever they met, and the group reward would be immediate, substantial, and self-reinforcing.

So here is a hypothesis. Give and take behaviour begins as a genetic defect for the individual(s) that happens to be beneficial to the genome as a whole.

It is easier to imagine this beginning in a situation where organisms do not have a great deal of mobility. Then consider one organism has several offspring that all share the same genetic defect, one that turns out to benefit the group as a whole. That colony might prosper and take over the environmental niche.

In the current experiment, for any food particle in contention, there are always other options not far away. If food is being consumed at a rapid pace, new food particles are continuously popping up around any given organism, so abandoning a food particle doesn't mean going hungry for long. Another soon appears nearby.

Then it is far more efficient to defer immediate reward and be polite according to established protocol even though that means allowing a neighbour to go ahead and eat the food the organism was headed for half the time. In the end, both organisms will require fewer steps to gain more nutrition, and there will be more churn in the food supply in general, benefiting all.

In several experiments, some colonies developed a protocol of their own initiative within a few hundred generations, whereby right-handed organisms would consistently defer to left-handers, and other colonies developed the opposite protocol with equal probability. In yet other colonies, no protocol ever developed, resulting in ill-mannered organisms that were always squabbling over food. These colonies scored significantly lower than civilized colonies (~40% lower group score).

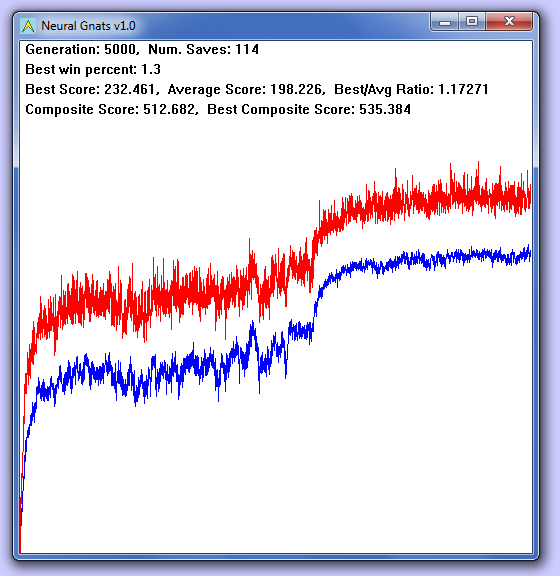

The image below shows the learning curve of one colony. The blue line is the group average score plotted per generation while the red line is the high score plot.

You will note the initial rise as they learn to navigate tends to flatten out once they reach around 330 points or so during the first 3000 generations (to 3/5ths of the way across). You will also observe that the curve is chaotic during this interval. This is due to the way they frequently tend to end up piled atop one another, resulting in considerable variability in scores.

Suddenly at around 3000 generations they "get religion", and start politely taking turns. Their scores begin a rapid rise with a slope equal to the initial climb up from zero. You can see that for the next 2000 generations the graph for the group score (blue) is much smoother, now that they are no longer squabbling over the food. Their score soars to 535 points before it stabilizes at the new level.

The Learning Curves: After 3000 generations Neural Gnats get religion

I have developed many previous experiments where neural network based organisms were evolved to wander the screen, looking for "food". This is an easy thing for them to learn, and there are many ways to accomplish this. In the simplest experiment, the direction to the nearest food particle is presented on a single neural input as an offset to the organism's current heading. There are two neurons in the "hidden layer", and two output neurons with which the neural net expresses the direction and speed of the next step it wants to take.
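To make the size of this simplest "brain" concrete, here is a rough sketch of a one-input, two-hidden, two-output feedforward network. The `tanh` activation, the per-neuron bias weight, the initial weight range, and the class name `TinyNet` are assumptions for illustration; the text does not say which choices the original application made.

```python
import math
import random

class TinyNet:
    """A feedforward net: inputs -> hidden layer -> two outputs."""

    def __init__(self, n_in=1, n_hidden=2, n_out=2, rng=None):
        rng = rng or random.Random()
        # Each neuron holds one weight per incoming signal plus a bias
        # (the bias and the [-1, 1] range are assumptions).
        self.hidden = [[rng.uniform(-1, 1) for _ in range(n_in + 1)]
                       for _ in range(n_hidden)]
        self.output = [[rng.uniform(-1, 1) for _ in range(n_hidden + 1)]
                       for _ in range(n_out)]

    @staticmethod
    def _layer(neurons, signals):
        # Weighted sum of the signals plus bias, squashed into (-1, 1).
        return [math.tanh(sum(w * s for w, s in zip(ws[:-1], signals)) + ws[-1])
                for ws in neurons]

    def step(self, inputs):
        """Return (turn, speed) for the next step, each in (-1, 1)."""
        hidden = self._layer(self.hidden, inputs)
        turn, speed = self._layer(self.output, hidden)
        return turn, speed
```

With these assumptions the whole genome is only ten numbers (four hidden-layer weights and six output-layer weights), which is what the genetic algorithm would be shuffling and mutating. A call such as `TinyNet().step([0.25])` presents a food offset of 0.25 and reads back the desired turn and speed.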

It is like pointing your finger at the ball for the dog. Some dogs just don't get it, and will stare at your finger. Others will look in the direction you are pointing, and go fetch the ball. In the case of the neural net based organisms, only the ones that "get it" will prosper and go on to produce progeny, and subsequent generations will become increasingly proficient at navigating to food.

However, with nothing more than these inputs, it is inevitable that the organisms will tend to pile up in places, finding themselves all in pursuit of the same remaining piece of food in a given locale. Then all piled atop one another, they go on to the next, unaware their tunnel vision does not permit them to see they are surrounded with food enough for all if only they would disperse. This tendency to clump up introduces chaos into their learning curve because simple bad luck may sideline the most proficient.

Watching this scenario play out over and over, I considered how to avoid these pile-ups. Clearly, they would need a little more information about their environment and some system of reward and/or punishment that they might develop the desired behaviour of keeping their distance from each other.

After trying several things, I settled on giving them not only the bearing and distance to the nearest food particle but also the bearing and distance to their nearest competitor. Then I laid down The Law: They would derive no nutrition from any food eaten in the presence of a competitor (within a radius of 20 pixels, or a couple of body lengths).
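The Law can be expressed as a small scoring rule, sketched here. The 20-pixel radius is from the text; the function name, the `food_value` parameter, and treating a competitor at exactly 20 pixels as "present" are assumptions. For brevity the sketch also ignores the wrap-around of the looping world.

```python
import math

NO_NOURISHMENT_RADIUS = 20.0  # pixels, as stated in the text

def calories_gained(eater_pos, competitor_positions,
                    food_value=1.0, radius=NO_NOURISHMENT_RADIUS):
    """Calories credited to an organism at the moment it eats.

    eater_pos and each competitor position are (x, y) tuples in pixels.
    Any competitor within `radius` makes the meal worthless.
    """
    ex, ey = eater_pos
    for cx, cy in competitor_positions:
        if math.hypot(cx - ex, cy - ey) <= radius:
            return 0.0
    return food_value
```

So `calories_gained((0, 0), [(10, 0)])` credits nothing, while the same meal with the nearest competitor at `(30, 0)` is credited in full.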

Soon enough they discovered if they kept a respectable distance from their competitors, they thrived. Those that came too close starved. Now we could call this "social behaviour" of sorts, but it doesn't come near to the remarkable behaviour witnessed in later experiments.

I never even considered the philosophical implications of what they were doing at that point. Rather, noting how their group scores soared once they spread out around the screen to take advantage of all the food offered, next I became obsessed with how they could be modified to be even more efficient.

I made endless experiments in giving them different kinds of information about their environment, like for example, the nearest competitor's current heading as well as its bearing, or the direction of the next nearest food particle as well as the nearest so that they might make a choice between the two, and maybe add to that the direction of the next nearest neighbour as well so they may be better able to plot a course to a food particle away from the crowd.

All these experiments were successful to different degrees, and I had organisms with up to 8 inputs and more. Then I hit upon an idea that made them super efficient. In addition to the inputs for food direction and distance and for nearest neighbour direction and distance, I gave them an input that would signal to an organism when his neighbour was after the same food particle as he. This input would be zero when there was no potential conflict, -1 when a neighbour was after the food particle and was closer to it, and 1 when the organism was closer to the food than his neighbour. Suddenly the scores took off and I had to redesign the presentation of the learning curve and other parameters to allow for a much greater range of scores.
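The contention signal just described amounts to a three-way comparison. A minimal sketch follows, with hypothetical parameter names; how food particles are identified and how an exact tie in distance is handled are not stated in the text, so the tie-goes-to-the-neighbour choice here is an assumption.

```python
def contention_input(my_target, my_distance, neighbour_target, neighbour_distance):
    """Value for the 'who is closer' input.

     0.0  no conflict: the neighbour is after a different food particle
    -1.0  conflict, and the neighbour is closer to the food
     1.0  conflict, and this organism is closer to the food
    """
    if my_target != neighbour_target:
        return 0.0
    # Assumption: an exact tie is reported as the neighbour being closer.
    return 1.0 if my_distance < neighbour_distance else -1.0
```

For instance, `contention_input("food7", 12.0, "food7", 30.0)` returns `1.0`, telling the organism it can expect to reach the contested particle first.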

I went on to make doing the math easier for them. Where earlier versions required them to develop some internal representation of the mathematical constant PI and the ability to compute arctangents, I began giving them, and receiving from them, more highly simplified heading information based on the normalized magnitude of angular differences. This no longer required them to develop some internal concept of PI or to do arctangents. Effectively, with this new scheme all they needed to do were simple additions and subtractions, and maybe some scaling.
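One way such a simplified heading signal could be prepared is sketched below: the simulation does the arctangent itself and hands the network only the angular difference, scaled into the range -1 to 1. The function name, the use of radians, and the sign convention (positive means counter-clockwise in mathematical axes) are assumptions; the original encoding may differ in detail.

```python
import math

def normalized_bearing(position, heading, target):
    """Offset from the current heading to the target, scaled to [-1, 1).

    position, target: (x, y) tuples
    heading:          current heading in radians
    0 means "dead ahead"; +/-0.5 means a quarter turn to either side.
    """
    dx = target[0] - position[0]
    dy = target[1] - position[1]
    absolute = math.atan2(dy, dx)          # the arctangent the net no longer needs
    diff = absolute - heading
    # Wrap into [-pi, pi) so the shorter way round is always reported.
    diff = (diff + math.pi) % (2.0 * math.pi) - math.pi
    return diff / math.pi                  # divide out pi so the net never sees it
```

With this encoding an organism that outputs a turn equal to its input would, after scaling, point straight at the food, which is the kind of simple addition and scaling mentioned above.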

Their scores continued to soar, but the more efficient I made them, the less interesting they became because each new modification required less imagination on their part. Of course now it was a no-brainer for the organisms. They could navigate with ease. They knew when they could graze at peace, unconcerned about encroaching neighbours, and they knew when there was contention over food, whether it would be more efficient to go ahead after some food particle or to abandon it to a competitor. There was certainly nothing here to analyze philosophically about social behaviour.

It was then I had the idea. Suppose I left it for them to work out some protocol so they could take turns with one staying out of the way while allowing the other to take nutrition from his meal?

How could I give my organisms some sense of unique identity so that they could know self from other and establish some kind of protocols based on that?

My first attempt was to assign each a number, but how to do it so they could compare their number with that of the neighbour, and make some generalized meaning from it? Sparing you the details, any approach I took in this direction failed.

Then I hit upon the solution of "Handedness" as described above. Their current handedness then became one of the pieces of information included among the various inputs to the neural network at each step.

Now that I had a reasonably functioning model that demonstrated social behaviour, I considered what the minimum necessary inputs were. The model I first developed had five inputs: direction and distance to the food, direction and distance to the nearest competitor, and a special identity input. Yet I knew these organisms could navigate the world and find food with just one input.

I considered, what if I just gave them, besides the input with heading to food, only a second input for current identity? That input really conveys a lot of information at once. When it is zero, the organism can graze in peace, knowing there are no competitors after their food. When it is signalled, they know there is a contention over the food they were headed for. At the same moment they are also aware of their unique identity. If they choose to flee a conflict, they would know to simply head in the opposite direction to their current heading, or at a minimum, to at least go no further in that direction.

Just to make a clarification, with only two inputs, the neural net-based organisms don't really know much of anything about the world they live in, least of all that they even have neighbours. All they really know is where the food is, and that it is always nutritious when the identity input is zero. When the identity input is signalled, however, they learn that the food may be gone when they get to it or it may provide them with no nourishment when they eat it. Of course, even this clarification is an anthropomorphism. Not very scientific, but too much fun to resist at times.

As it turned out, this information is all that is required to spontaneously give rise to the same turn-taking behaviour that was first seen in the more complex model. Then all that is required is a total of 6 neurons: Two for the input layer, two for the hidden layer, and two for the output. Because the model is so simple, it evolves very quickly. Within about 10,000 generations it has usually reached its peak.

I conducted a final experiment with the two-input model where no longer was the identity input set to zero when there was no contention, and therefore handedness no longer served as an alert to contention. As the organisms wandered about the world, all they ever knew was the direction to the nearest food particle and their handedness of the moment. Since the organisms have no memory, they would never even notice the moment their handedness changed, so had no way of knowing there were competitors in contention for their food.

Even under this condition they developed a protocol, though the reward was small - 20 to 30 points above what they had achieved without any protocol. However, the reduction of variability of the group scores could clearly be seen in the smoothing of the learning curve.

I ran three colonies under this regime. One never demonstrated any benefit from handedness whatsoever. There were no differences in the navigational skills of right and left-handers.

The second colony averaged a few points higher and showed slightly less chaos in the group scores. Close observation revealed that when they were left-handed, they tended to sort of side-swipe the food particles whereas the right-handers attacked the food head-on. This slightly reduced the tendency to clump as it increased the probability that each might see a different particle of food on the next step and therefore their courses may diverge.

The third colony, however, clearly found an advantage in handedness. After about 5,000 generations the right-handers began skipping meals. They would often drive right on past a food particle in a sweeping arc as if it wasn't there, turning down maybe as much as every second or third opportunity to eat. On the other hand, the left-handers were swift and agile, going straight for the food every time. The group score jumped up by 20 points or more and the plot for the group scores smoothed out noticeably as the tendency to clump was reduced to a minimum. However, considerable variability in the top scorers' scores continued as before.

It turned out the protocol they developed was not stable. After a few thousand generations, it fizzled out, was re-established a couple of times, and finally lost again. They had never rediscovered their protocol by the time I ended the experiment at around 20,000 generations.

What is needed is research into the moment the reported behaviour arises. What conditions are necessary for it to come about? At the moment all that is understood is that this behaviour has a brief window within the first few thousand generations to develop, while there is still sufficient plasticity in the neural networks. If it doesn't arise during this time, it never will, as confirmed by tests run to 80,000 generations.

You can download the application here. Just unzip the package and read the ReadMe file. I am also making the source code available as an MSVC2008 project so that you may verify the behaviour reported for yourself.